Generative AI – A mighty accelerator

By Meagan Gentry / 14 Apr 2023 / Topics: Artificial Intelligence (AI), Generative AI, Cloud, Analytics

Article originally published to LinkedIn in partnership with Women in Cloud.

By now, you have likely experimented with mainstream generative AI, whether ChatGPT or DALL-E, and found it impressive, powerful and maybe even overwhelming.

Whether you’re a decision-maker in your organization or are just starting out, you’re likely asking questions about its potential role in your business: Should we dive in head-first or proceed with caution? What are our blind spots? How will we know if we are falling behind? What’s the best way to onboard ourselves and our clients?

When AI meets your customers or workforce

Generative AI has great potential to relieve the tedium in efforts like research and knowledge management, content creation and software development, just to name a few.

One of the most common applications of generative AI is building highly intelligent conversational agents backed by a large knowledge base. For example, suppose a pharmacy wants to offer round-the-clock ChatGPT-style service to its customers, letting them describe their symptoms, current medications and health context in everyday language, just as they would with their family physician.

An application like this raises important questions: What if the AI is wrong and gives bad advice? What if the AI leaks the wrong data to a patient and violates HIPAA? Will there even be a need for pharmacists and telemedicine in the future?

To keep AI from running wild, we need to understand both the realistic threats and the probable opportunities of generative AI.

Over the past few weeks, I’ve heard several leaders say, “If you learn to use AI, you're less likely to be left behind.”

Those of us in technology are accountable for keeping our skills relevant, and we’re constantly weighing the benefits, and the opportunity cost, of mastering new technology such as ChatGPT for problem solving and Codex for faster development. At the same time, there is widespread concern that generative AI will rapidly displace human effort across all industries and reshape the labor market, all triggered by the recent mainstream release of powerful AI models.

On a positive note, generative AI could be a catalyst for better workforce operations and accessibility. One use case we’ve explored recently at Insight is using AI speech generation for more accurate multilingual translation. Similar applications can support individuals with speech, auditory or visual disabilities.

Transparency, explicability and data security

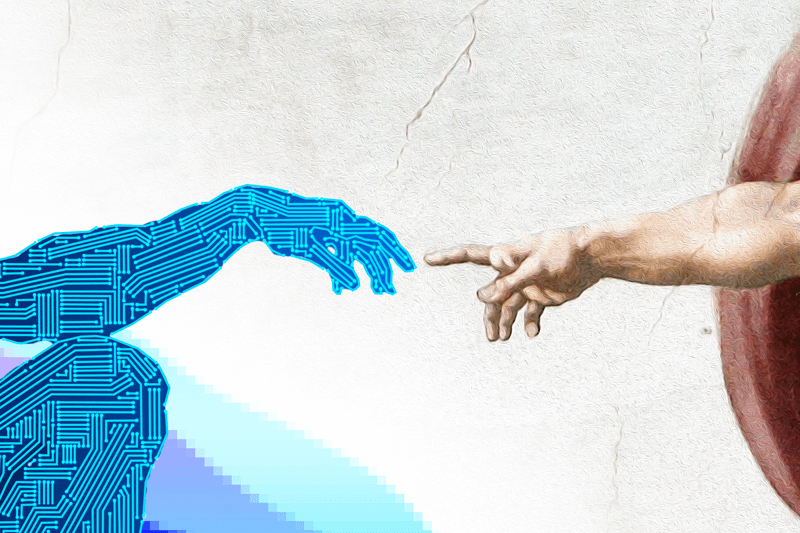

Generative AI didn’t arrive on the scene with a well-defined set of guardrails. As disruptive as our creations can be, humans are also capable of skillful stewardship of our inventions; we’ve been doing it for centuries. If opportunities to tune acceptable-use policies are identified early and those policies are implemented quickly, generative AI could be a net positive. But first, we need to learn how it works.

The better we understand how AI systems work, the more confidently we can use them without fear. Generative transformer models like the one behind ChatGPT were trained on massive public corpora drawn from the internet. This means that every source of information in that data, including false information and opinions, can influence the model’s responses to some degree.

Mainstream AI systems come with no guarantee that their responses will be factual. You can think of a generative AI model as that friend who believes everything they read on the internet: sometimes helpful and usually entertaining, but best taken with a grain of salt.

AI users should verify output before trusting it and should challenge AI recommendations, just as we challenge recommendations from our friends, family, financial advisors and real estate agents: When a recommendation doesn’t quite add up, we ask why.

So, what’s the problem with asking why an AI system produced an odd or unexpected response? AI systems are notoriously difficult to explain because of the advanced mathematics “under the hood.” At Insight, we are leaning into Explainable AI (XAI) to help connect our clients to their AI systems. XAI is an emerging discipline within responsible AI that develops tools to explain, in plain language, the real-world relationships between sophisticated algorithms and their outputs.

In addition to explicability, our clients are interested in data security, specifically the retention policies for prompts (the inputs users send to the AI system). Businesses are quickly discovering how tempting it can be for employees to expose sensitive information to a public endpoint. As businesses look to alternatives such as secure deployments of ChatGPT, they are turning to partners like Insight to help them navigate the security and implementation challenges and define their data retention needs.

Is this the end of creativity?

We can now use the image generation model DALL-E to create visual content for artistic purposes, marketing or product design.

While this new capability raises plenty of understandable questions about creative property and market impact, using technology to enhance or expedite creativity is not new at all. Today, some of us use iPads to create art with a stylus. Before the era of digital art, artists endured all sorts of “analog challenges,” including purchasing paint and paintbrushes, managing humidity and light, and coordinating transportation for sales and gallery shows (instead of listing online).

The beauty of innovation is that it often creates space for variety: Oil painting didn’t disappear when the tablet was introduced, and AI art doesn’t have to eclipse intrinsically valued human-crafted art.

No matter your industry, you may soon be using generative AI to visualize and diagram important meetings, collaboration and thought processes. Wouldn’t it be nice if, as someone spoke, a diagram or framework appeared so you could see their thoughts represented visually? Creative conversations about a product design, or complicated explanations of a process flow, could be visualized dynamically without a PowerPoint or Visio artist in the background sorting out the details.

Whether you are concerned about the impact on the workforce, security and transparency or, on the purely human side, creativity, my hope is that you approach AI advancements with caution and thoughtfulness instead of fear. This early in generative AI’s introduction, many of us will find leaders more open to influence in this new territory than ever before. For each warning sign ahead, there is a matching beneficial use case to be built.

What will you build with generative AI and how will you usher it into adoption responsibly?