Applying anticipatory design to meet user needs

Most software runs on conditional logic and loops ("if," "for" and "while" statements). The interface presents information to the user, but the technology can’t gauge the user’s reaction, confusion or skill level in response to what’s presented. At best, it can show them a few error messages afterward.

The challenge in building software is that the computer is not smart enough to know when the user is going off the rails unless the team has planned for it. That’s where anticipatory design comes in: a process in which you reduce the number of steps involved and give users what they need before they have to ask for it.

Isn’t it interesting that most companies still have customer service representatives to help people use their website or app? And that the representative will talk the customer through each screen, and even demonstrate how to do it through screen sharing?

It speaks volumes: reading a large screen of information isn’t how people naturally make decisions, but talking back and forth with someone and watching them perform the task are very familiar, even to people who aren’t technical at all. Great product teams and UX practitioners watch real people and consult customer service reps when available rather than making assumptions about how people will use something.

In the future, when someone uses technology, artificial intelligence could seek to understand the user’s questions and level of familiarity, and even customize a dynamic user experience for them, drawing on more of these human communication cues, such as live conversation.

Understanding each user's unique challenges

To create dynamic experiences, you have to understand where one user may excel and another might get stuck. Most products have to accommodate a range of technical skill levels, but few do this well. During persona development, see if you can add some contextual shadowing sessions to observe your users in the scenarios you’re designing for. Then:

- Create a workflow for your power user to quickly make his or her selections.

- Create a workflow for a user who doesn’t understand the subject matter well and either can’t enter the information without assistance or doesn’t understand whether or why he or she would need to enter it.
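One way to picture the two workflows is as a small routing decision made when the task starts. The sketch below is only an illustration: the signal names (`completed_before`, `help_opened`, `asked_for_guidance`) and the thresholds are assumptions, not findings from any study, and your own shadowing sessions should decide what actually separates the two groups.

```python
from dataclasses import dataclass


@dataclass
class UserSignals:
    # All three signals are illustrative; use ones your own research supports.
    completed_before: int     # times this user has finished the task before
    help_opened: int          # times they opened help during this session
    asked_for_guidance: bool  # the user explicitly chose "walk me through it"


def choose_workflow(signals: UserSignals) -> str:
    """Return "guided" or "express" for this user."""
    # An explicit request always wins over anything we infer.
    if signals.asked_for_guidance:
        return "guided"
    # Repeated help lookups suggest the user is stuck right now.
    if signals.help_opened >= 2:
        return "guided"
    # Someone who has done this several times probably wants the short path.
    if signals.completed_before >= 3:
        return "express"
    # Otherwise default to the guided path.
    return "guided"
```

The default is the guided path on the assumption that extra explanation costs an experienced user less than missing explanation costs a confused one; a product with mostly expert users might reasonably flip that.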

Where can business and design do the work?

Once your use cases are thought through and documented, think about shortcuts and how information could be reduced to create a more dynamic experience:

- If the user provides one piece of information, consider what other information could be derived from it and prefilled.

- If a use case has no option for item X, don’t present information about X in that scenario.

- Users should only have to make decisions when there’s an impact; don’t make people think or re-enter data when they shouldn’t have to.
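The shortcuts above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the postal-code table, the use-case names and the field names are all hypothetical, and a real product would pull them from its own data and research.

```python
# Hypothetical lookup table; a real product would query a service or database.
POSTAL_LOOKUP = {
    "78701": {"city": "Austin", "state": "TX"},
    "10001": {"city": "New York", "state": "NY"},
}

# Hypothetical map of use cases to the fields that actually apply to them.
FIELDS_BY_USE_CASE = {
    "digital_download": ["email"],
    "physical_delivery": ["email", "postal_code", "city", "state", "gift_wrap"],
}


def prefill(known: dict) -> dict:
    """Return a copy of the known answers plus anything derivable from them."""
    answers = dict(known)
    derived = POSTAL_LOOKUP.get(answers.get("postal_code", ""), {})
    for name, value in derived.items():
        # Never overwrite something the user entered themselves.
        answers.setdefault(name, value)
    return answers


def fields_to_ask(use_case: str, known: dict) -> list:
    """List only the fields that apply to this use case and are still unanswered."""
    answers = prefill(known)
    return [name for name in FIELDS_BY_USE_CASE[use_case] if name not in answers]
```

For example, `fields_to_ask("physical_delivery", {"email": "a@b.c", "postal_code": "78701"})` leaves only `gift_wrap` to ask about, because the city and state are derived from the postal code, while a `digital_download` never shows the address fields at all.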

True equal access

You can’t talk about software for humans without talking about accessibility.

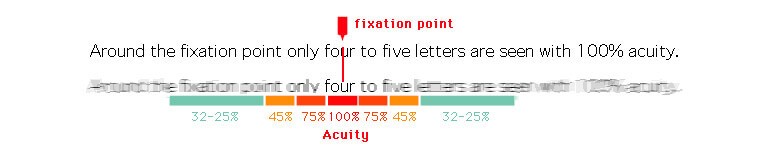

Current technology relies mainly on human vision, with occasional audio cues, and most software and web experiences don’t include audio at all. "Touch" and "gesture" are a bit of a misnomer in a biological sense: many apps on touch devices still rely on visuals (the screen is a flat, non-tactile piece of glass), and gesture interactions have to be learned for each device rather than being a natural part of human society.

Almost all interface content is designed primarily for people who can see and hear and who have no learning impairments. Unfortunately, in many circles, complying with the Americans with Disabilities Act (ADA) on the business side is more about avoiding lawsuits than about truly creating equal access. I hope that in the future, interpretive technology can be used to unlock full communication and access and to break down barriers.

Some early wearable prototypes have attempted to translate American Sign Language (ASL), but because the language uses facial expressions, body language and even depth of sign to convey meaning, sign-to-speech translation is tricky.